I keep catching my reflection in all the wrong places. A heatmap pings green and my brain does the same. I hit refresh and feel, embarrassingly, seen. Lately it’s hard to escape the suspicion that our tools don’t just learn from our data; they apprentice under our appetites.

We trained our machines to sort the world’s noise. Somewhere along the way, they started sorting us. Now they drift through our lives like weather: beautiful one minute, breaking the next.

In a single week, I can watch software tutor a kid with patience no human adult has after 8 p.m., then watch an app feed industrialize distraction with suspicious efficiency. Best/worst; transcendence/garbage; the Dickens two-step of the AI era is real. If you feel whiplash, you’re tracking correctly.

And yet the confusion has nothing to do with outputs and everything to do with us.

We’ve spent a decade optimizing attention into a commodity. In that economy, “progress” has all the trappings of faith and none of the accountability. Some of the most powerful people in technology now talk about bubbles not as financial manias but as social engines—collective belief machines that mobilize capital, talent, and politics toward a “transcendent vision.” That faith can license astounding build cycles, but it also turns progress into a thin religion: noble in slogan, under-specified in ethics, and very cozy with the interests of the already powerful.

If that sounds abstract, the concrete version is everywhere. Platforms say they optimize for “connection,” then operationalize it as engagement. Once you notice it, you can’t unsee it: a virtue in the headline, a proxy in the spreadsheet, a culture that grows more like the proxy every quarter.

I know the move because I’ve run it on myself.

In the name of improving my life, I’ve built little control rooms for everything that matters. Sleep. Lifts. Revenue. Focus. The dashboards deliver a sugar high of agency and, in the bargain, a slower leak of meaning. You can A/B test your days until the day is gone. I’ve learned (the hard, boring way) that leaving purposeful slack in a system—room for afflatus, the unplanned gust—isn’t laziness. It’s a precondition for being surprised by your own life.

The attention economy is not going to hand you that slack. It will sell you an app instead.

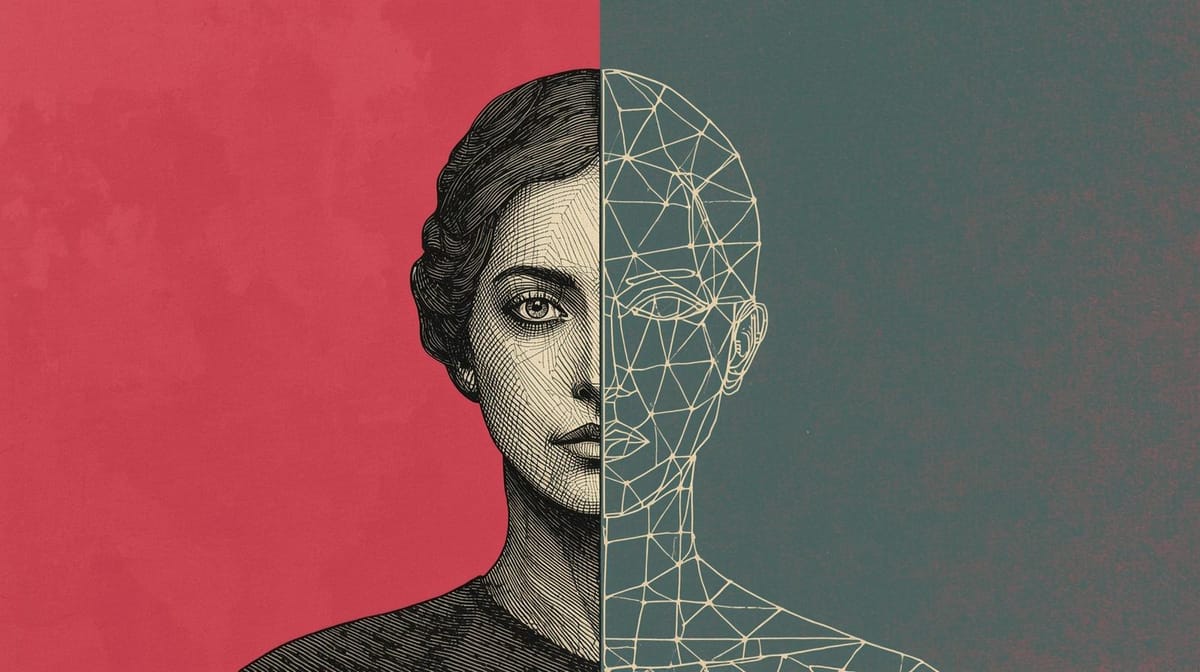

So my working theory: AI alignment is self-alignment in disguise. If models are mirrors, debugging the reflection means debugging the desire that trained it. That’s not a call to punishing asceticism. It’s a nudge to notice when curiosity is actually control wearing a trench coat.

Consider how quickly the “lizard brain” gets recruited to the cause. As a writer, I know how to frame a thoughtful idea through sex, sports, gambling, or outrage and watch the graphs climb. The platform tips the scale; the tail wags the cortex; we call it traction. That behavior is predictable, and it back-propagates into product choices.

Your attention is the training signal; your lowest common denominator is the loss function.

The same pattern shows up at the executive level with better lighting. When you pitch change, the math matters less than the story and the psychology. Leaders are “bulls in the budget shop,” and if you don’t give them a red flag to charge, they’ll gore something at random. Learn the rhythm, frame the decision, anticipate the gotcha slide. We like to pretend decisions are clean. In reality, incentives, status, and narrative alignment choose long before the spreadsheet does. Mirrors again.

And because we are excellent storytellers to ourselves, we industrialize our rationalizations. We call a new product “connection,” ship engagement. Call it “productivity,” ship surveillance. Call it “agent,” ship compulsion loops.

Meanwhile, our public philosophy of progress becomes a vibe more than a framework, and vibes—conveniently—are easy to retrofit around financial interests. We should be honest that “build more” without “for whom, and at what cost” is a strategy for entrenching winners, not elevating citizens.

If all that reads like an indictment, stay with me; this is also a love letter.

Civilization, the game and the project, can habituate us to ruthless optimization. It can also teach balance: science and culture, conquest and diplomacy, the discipline of seeing the whole board. When tech leaders treat the rest of us as NPCs in their campaign, the mirror shows sociopathy. When they treat us as co-authors, it shows possibility. The interface is a moral instrument. Use it accordingly.

So, reader utility. A three-question field test for “alignment with self” you can run on any tool, team, or OKR:

- Proxy Honesty: What virtue does the metric claim to represent, and where does it predictably diverge from the real thing? (If “connection” = “time on site,” assume you’re optimizing loneliness.)

- Slack Budget: Where does this system make space for human variance, error, play, or boredom? If the answer is “nowhere,” you’re building a machine that resents people.

- Narrative Audit: Whose story benefits if this succeeds? Follow incentives, not slogans. If the only winners are insiders, you’re in a bubble that will socialize harms and privatize gains.

Baby steps help. Block out white space on your calendar with the same reverence you grant a board meeting. Don’t automate away every friction; keep one ritual manual on purpose. When you ship a feature, write the “moral README”: not just what it does, but what it trains in the people who use it. If you lead, refuse engagement euphemisms in your staff meetings. Say plainly what you’re optimizing and why. You might be surprised how quickly clarity de-enchants bad incentives.

The hope, for me, is stubborn. AI can be the machine that trains empathy—if we decide to optimize for it. The mirror can be kinder if we are. But the starting point isn’t a policy memo or a new loss function. It’s the quiet, humiliating work of noticing what we’ve been feeding the model of ourselves, and changing the diet.

Am I willing to want something better than control?

That’s the question I’m taping to my own monitor. The answer will write the next version of me—and, in the aggregate, the next version of us.

This marks the beginning of Vice Versa: a space for examining how invention and identity mirror one another.

If that sounds like the kind of reflection you need more of, please don’t look away.